📖 Rust Engine Replacement Model#

⏱ | words

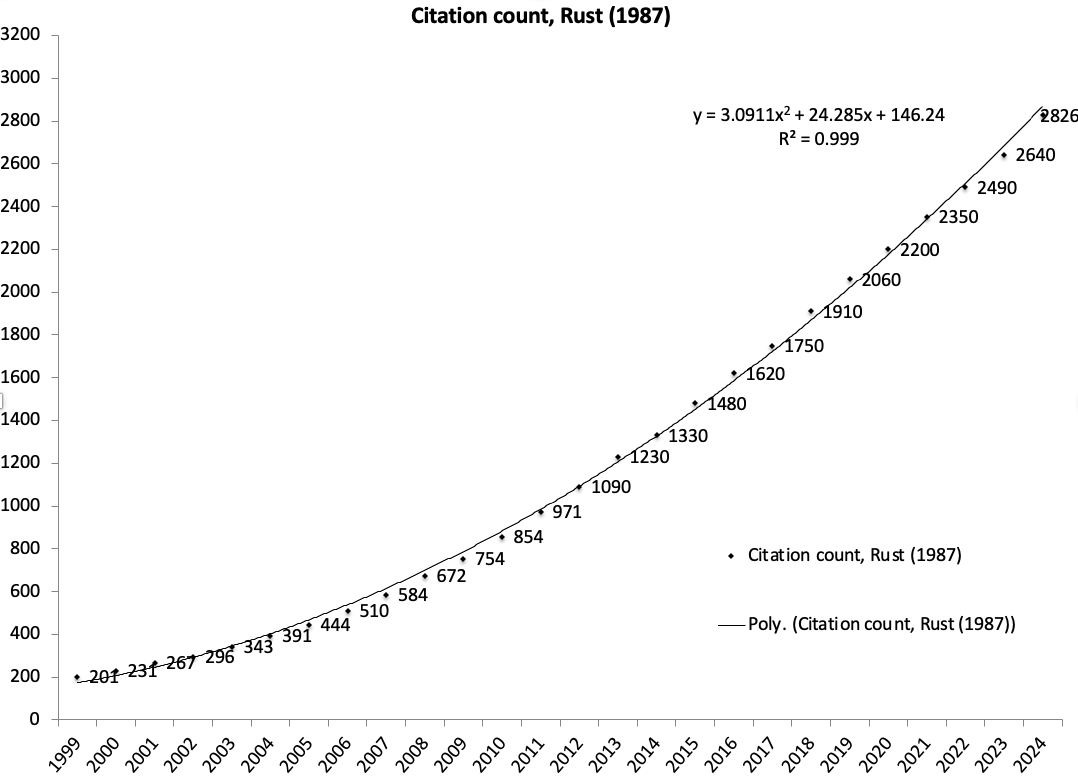

Rust (1987) Empirical Model of Harold Zurcher#

📖 Rust [1987], Econometrica “Optimal Replacement of GMC Bus Engines: An Empirical Model of Harold Zurcher”

Foundational paper in dynamic structural econometrics

Develops the framework used extensively in many fields today

Ingredients:

Simple dynamic model: binary discrete state regenerative optimal stopping problem

Smart solution method: polyalgorithm with fast Newton-Kantorovich iterations

Coherent econometrics specification: unobserved states ensuring non-degenerate likelihood

New maximum likelihood estimator: nested fixed point (NFXP) estimator

Very real application with actual data collected by a real person named Harold Zurcher

Dynamics of citation count of Rust (1987) Zurcher paper

📚 Who cares about Harold Zurcher?#

Occupational Choice (Keane and Wolpin, JPE 1997)

Retirement (Rust and Phelan, ECMA 1997)

Brand choice and advertising (Erdem and Keane, MaScience 1996)

Choice of college major (Arcidiacono, JoE 2004)

Individual migration decisions (Kennan and Walker, ECMA 2011)

High school attendance and work decisions (Eckstein and Wolpin, ECMA 1999)

Sales and dynamics of consumer inventory behavior (Hendel and Nevo, ECMA 2006)

Advertising, learning, and consumer choice in experience good markets (Ackerberg, IER 2003)

Route choice models (Fosgerau et al, Transp. Res. B)

Fertility and labor supply decisions (Francesconi, JoLE 2002)

Residential and Work-location choice (Buchinsky et al, ECMA 2015)

Equilibrium Allocations Under Alternative Waitlist Designs: Evidence From Deceased Donor Kidneys (Argarwal et al, ECMA 2021)

Equilibrium Trade in Automobiles (Gillingham, Iskhakov, Munk-Nielsen, Rus, Schjerning, JPE 2022)

…and many more

To this day NFXP is very powerful method for both solving and estimating dynamic discrete choice models

📖 Iskhakov et al. [2016] (Econometrica) “Comment on “Constrained Optimization Approaches to Estimation of Structural Models”” – we show that with proper implementation Rust’s method is as powerful as modern general solvers unleashed at the similar problem today

Components of the dynamic model#

State variables — vector of variables that describe all relevant information about the modeled decision process, \(x_t\)

Decision variables — vector of variables describing the choices, \(d_t\)

Instantaneous payoff — utility function, \(u(x_t,d_t)\), with time separable discounted utility

Motion rules — agent’s beliefs of how state variable evolve through time, conditional on choices, \(x_{t+1} \sim f(x_t,d_t)\)

Value function — maximum attainable utility \(V(x_t)\)

Policy function — mapping from state space to action space \(\delta : x_t \mapsto d^{\star}(x_t)\) that returns the optimal choice

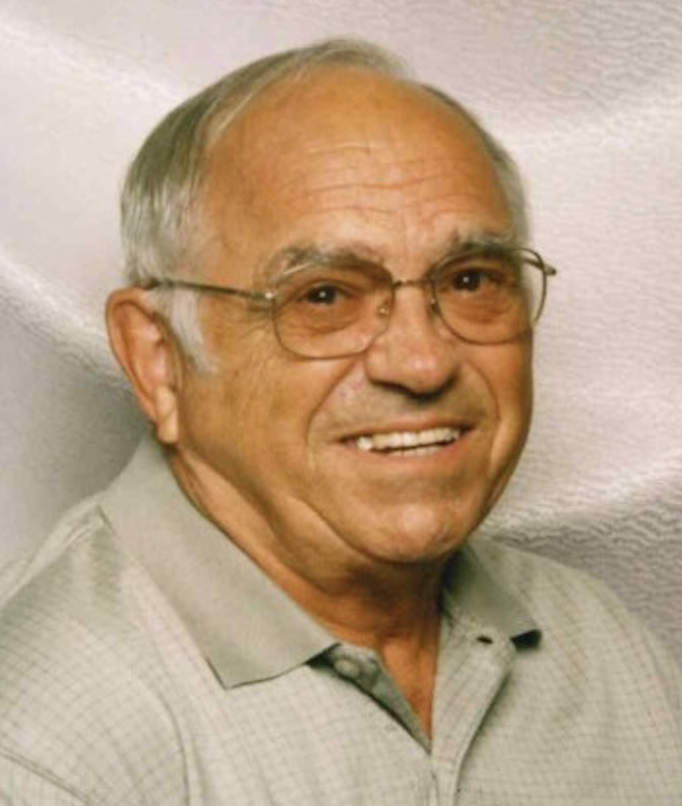

Harold Zurcher engine replacement problem#

Superintendent of the Madison, Wisconsin bus company

June 16, 1926 - June 21, 2020

States and choices#

choice set: \(\{ \text{keep} , \text{replace} \} = \{0,1\}\)

Each bus comes in for repair once a month and Zurcher chooses between ordinary maintenance \((d_{t}=0)\) and overhaul/engine replacement \((d_{t}=1)\).

state space: mileage at time t since last engine overhaul \(x_t\)

Harold observes many different attributes of the buses which come into the shop, but we focus on the main one for now

Zurcher’s preferences#

Instantaneous payoffs are given by the cost function that depends on the choice

\(RC\) = replacement cost

\(c(x,\theta_1)\) = cost of maintenance with preference parameters \(\theta_1\)

Motion rules#

First of all, mileage is continuous. How to deal with the continuous state space?

Rust discretized the range of travelled miles into \(n=175\) bins, indexed with \(i\), \(\hat{X} = \{\hat{x}_1,...,\hat{x}_n\}\) with \(\hat{x}_1=0\)

Mileage transition probability: for \(j = 0,...,J\)

Mileage in the next period \(x'\) can move up at most \(J\) grid points

\(J\) is determined from the observed distribution of mileage

Effectively, the probabilities of increase of mileage from any existing mileage are the same

If not replacing (\(d=0)\)

If replacing (\(d=1)\)

Dynamic optimization and Bellman equation#

To minimize the discounted expected value of the costs, Zurcher should find such policy function \(\delta(x_t):X\rightarrow \{\text{keep},\text{replace}\}\) such that \(d_t=\delta(x_t)\) maximizes

The function \(V(x_t)\) denotes the maximum attainable value at period t

where \(\Pi\) is a space of policy functions \(\delta(x_t):X\rightarrow \{\text{keep},\text{replace}\}\), and \(d_t = \delta(x_t)\)

Using Bellman Principle of Optimality, we can show that the value function \(V(x_t)\) constitutes the solution of the following functional equation

where expectation is taken over the next period values of state \(x'\) given the motion rule of the problem

Bellman operator#

The above Bellman equation can be written as a fixed point equation of the Bellman operator in the functional space

The Bellman equations is then \(V(x) = T(V)(x)\), with the solution given by the fixed point \(T(V) = V\)

Classification of the Zurcher model: Finite or infinite horizon? Discrete or continuous choice? Discrete or continuous states? Suitable solution methods?

Making the model suitable for empirical work#

Remember that Zurcher observes many different attributes of the busses that come into the shop

But we as an econometrician do not!

Yet, these are likely to be the reason for observing different behavior in same states (for the same observed mileage)

Need to include error terms into the model, denote \(\epsilon\)

Back to the RUM framework of \(u(d,x)+\epsilon(d)\) but now in dynamic setting

Should the error term be part of the state space of the problem?

Updating the Bellman equation#

\(\varepsilon\) is a new (vector) state variable

where \(\varepsilon_d\) is the component of vector \(\varepsilon \in \mathbb{R}^2\) which corresponds to \(d\)

Rust assumptions#

(AS) Additive separability in preferences

same as in the static RUM models

(CI) Conditional independence

in addition to standard independence assumptions of static RUM

Error terms are independent across observations due to random sampling

Error terms come as vectors, one for each decision, and are independent across choices

And additional assumption for dynamic models: conditional on \(x\), there is no serial correlation in error terms across time

(EV) Extreme value Type I (EV1) distribution of \(\varepsilon\)

making things simpler by providing closed form expressions for choice probabilities

can be easily relaxed to GEV, and with the trouble of multidimensional integration to other continuous distribution with full support

What Rust assumptions allow:#

Separate out the deterministic part of choice specific value function \(v(x,d)\) (SA)

Compute the expectation by part (CI)

Use max-stability of EV1 to compute expectation w.r.t. \(\varepsilon'\) (EV)

Bellman equation and Bellman operator in expected value function space#

Let \(\mathbb{E}\big[ V(x',\varepsilon')\big|x,d\big] = EV(x,d)\), then

this is Bellman equation in expected value function space

when the state space is discrete the integral is, of course, a simple sum over future values

Writing all together we have

and can define the operator \(T^*\) in the expected value function space

Solution to the Bellman functional equation \(EV(x,d)\) is also a fixed point of \(T^*\) operator, \(T^*(EV)(x,d)=EV(x,d)\)

\(T^*\) is also a contraction mapping in the space of expected value functions as shown by Ma and Stachurski [2021]

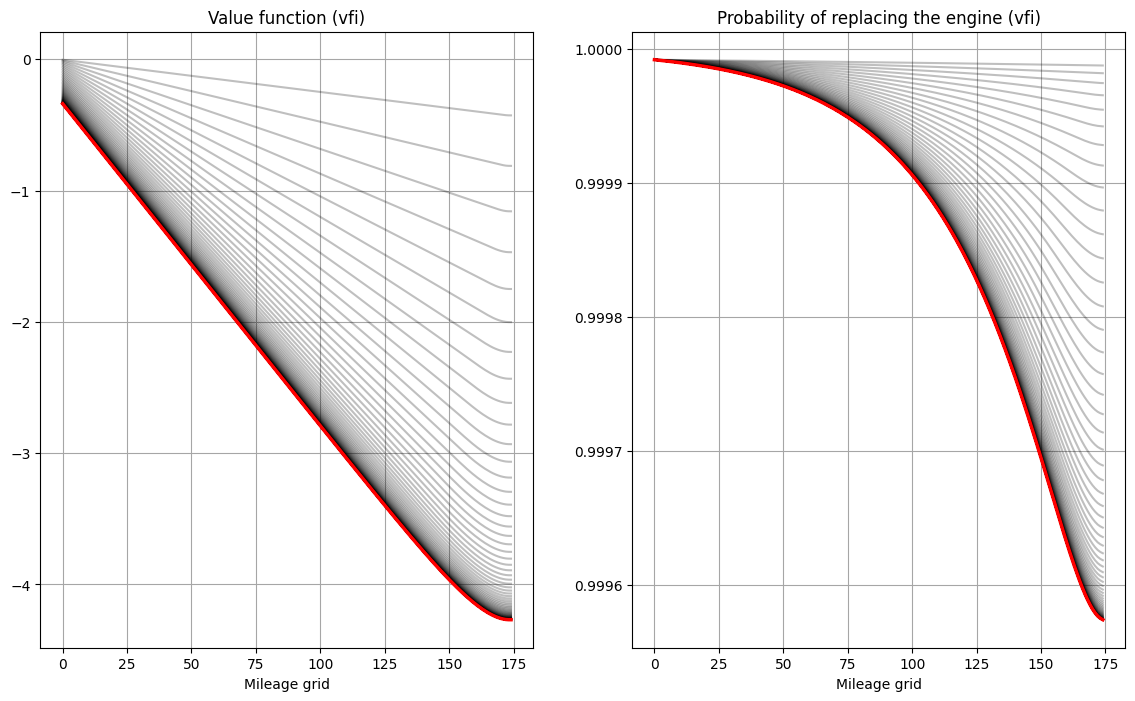

Contraction mapping \(\Rightarrow\) VFI is guaranteed to find the unique solution!

Dimentionality of this fixed point problem is smaller that the one in value function terms (because \(\varepsilon\) is not included)

It is also numerically easier to work with smooth expected values \(EV(x,d)\) rather than \(V(x,\varepsilon)\)

Later we’ll also see a very nice numerical optimization possibilities as well

Choice probabilities#

Once the fixed point is found, the optimal choice probability \(P(d|x)\) is given by the Logit structure (assumption EV):

The choice probability serve as the bases for forming the likelihood function. Will continue with this when talking about structural estimation!

NK iterations method#

Zurcher model has the following features:

infinite horizon

discretized mileage which is the only state (in EV formulation) = finite state space

discrete choice

idiosyncratic random components

Therefore the suitable solution methods are

value function iterations (VFI)

policy iterations video 43 in CompEcon course

Newton-Kantorovich method (NK iterations)

application of Newton method in functional spaces

numerically equivalent to policy iterations

The NK iteration is

The new operator is the difference between the identity operator \(I\) and Bellman operator \(\Gamma = T^*\)

\(\mathbb{0}\) is zero function

\(I-\Gamma'\) is a Fréchet derivative of the operator \(I-\Gamma\)

We work with finite approximations on the discrete state space \(\rightarrow\) vectors and matrixes!

let \(n\) denote the number of state points (in mileage)

\(EV(x,d)\) is given by a vector of length \(n\), assuming that the first element is reused to describe the expected value of replacing

\(T^*(EV)(x,d) = \Gamma(EV)(x,d)\) is a non-linear \(n\)-valued multivariate function of \(EV\)

Fréchet derivative \(I-\Gamma'\) is an \(n \times n\) matrix of first order derivatives of each output of \(T^*(EV)(x,d)\) w.r.t. each input

Therefore: NK iterations on finite approximations are similar to solving a system of \(n\) equations with \(n\) unknowns with Newton method!

Matrix expression for the finite approximation of the Bellman operator#

\(EV\) is a \(n \times 1\) column vector

\(\Pi\) is the \(n \times n\) matrix of mileage transition probabilities

\(U(\cdot)\) is a column-vector of costs for all points in the state space, conditional on decision

\(L(\cdot,\cdot)\) is the logsum function returning an \(n \times 1\) vector

notation \(\bullet[i]\) denotes the \(i\)-th element a vector

Implementation of Fréchet derivative#

Finite approximation of the Bellman operator is

Fréchet derivative w.r.t. \(EV\) is then given by \(n \times n\) matrix

Differentiating the logsum function w.r.t. a scalar#

the logsum function \(L(w_1,w_2) = \log\big[ \exp(w_1) + \exp(w_2) \big]\)

(\(L(w_1,w_2)\) is the expectation of maximum of \(w_i + \varepsilon_i\), where \(\varepsilon_1, \varepsilon_2\) are distributed independently with type 1 extreme value distribution)

let \(p_i = {\exp(w_i) \over \exp(w_1) + \exp(w_2)}\) denote the corresponding choice probabilities

Differentiating the logsum function w.r.t. \(EV[i],\;i>0\)#

where \(P[i]\) is a shortcut notation for probability of keeping \(P(0|x[i])\) at state point \(i\)

Differentiating the logsum function w.r.t. \(EV[0]\)#

where \(\bar{P}[i]\) is a shortcut notation for probability of replacing \(P(1|x[i])\) at state point \(i\)

Matrix notation for the Fréchet derivative#

NK iterations algorithm#

Initialize value function at \(EV_0\) (starting values matter!)

Perform the Newton-Kantorovich step, computing the policy function along the way of applying the Bellman operator \(\Gamma(\cdot)\)

Repeat until convergence value function space

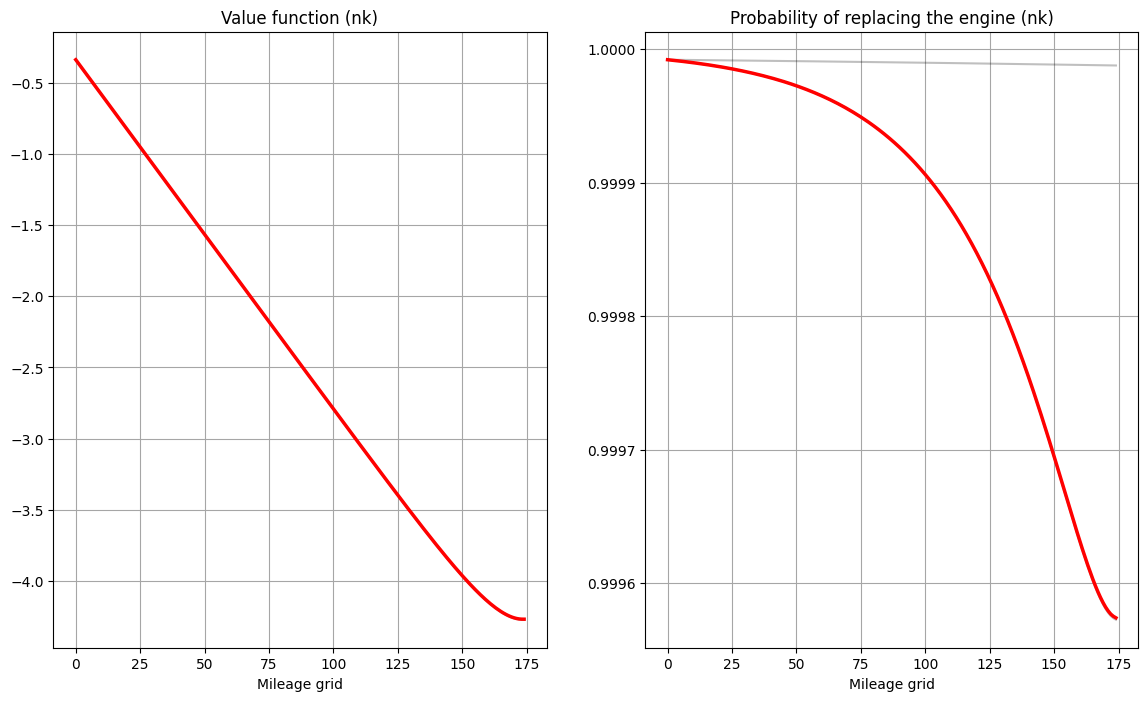

# compare SA, NK

model = zurcher(beta=0.9) # try different value of beta

ev1,pk1 = model.solve_show(maxiter=1500)

ev2,pk2 = model.solve_show(solver='nk')

print()

print('Max diff between value functions is ' ,np.amax(np.abs(ev1-ev2)))

print('Max diff between policy functions is',np.amax(np.abs(pk1-pk2)))

Rust model of bus engine replacement (id=140126209088464) solved with vfi in 123 iterations

Rust model of bus engine replacement (id=140126209088464) solved with nk in 2 iterations

Max diff between value functions is 8.957091488293045e-06

Max diff between policy functions is 9.113154675333135e-12

Does VFI algorithm always converge? What determines the speed of convergence of the VFI algorithm? Does NK algorithm always converge?

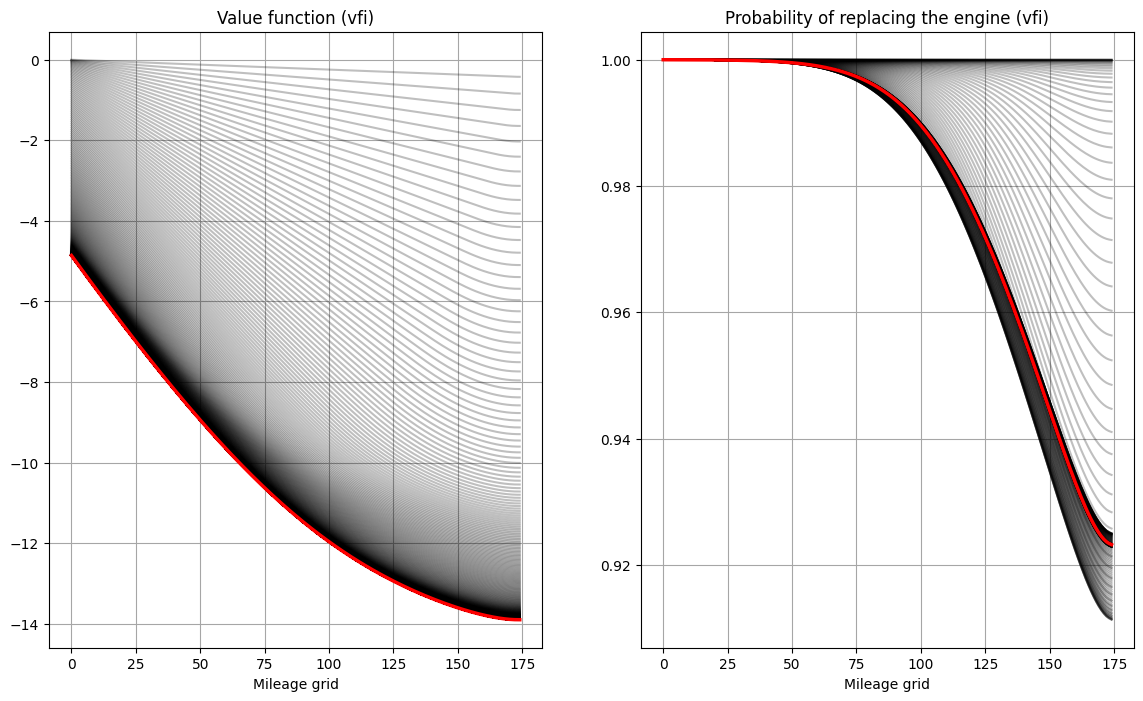

Properties of VFI vs Newton-Kantorovich solution methods#

VFI is globally convergent (Bellman is contraction mappint \(\Rightarrow\) single fixed point)

VFI convergence rate is \(\beta\), very slow in approaching the fixed point when \(\beta <1\) is close to one

vs.

Newton-Kantorovich has quadratic convergence rate

Newton-Kantorovich is sensitive to starting point

# compare SA, NK

model = zurcher(beta=0.975)

ev1,pk1 = model.solve_show(maxiter=1500,verbosity=1,plot=False)

ev2,pk2 = model.solve_show(solver='nk',verbosity=1,plot=False)

print()

print('Max diff between value functions is ' ,np.amax(np.abs(ev1-ev2)))

print('Max diff between policy functions is',np.amax(np.abs(pk1-pk2)))

Solver = vfi

----------------------

iter err

----------------------

0 4.2728e-01

1 4.1659e-01

2 4.0617e-01

3 3.9600e-01

4 3.8608e-01

5 3.7640e-01

6 3.6696e-01

7 3.5773e-01

8 3.4873e-01

9 3.3992e-01

10 3.3131e-01

11 3.2289e-01

12 3.1464e-01

13 3.0655e-01

14 2.9862e-01

15 2.9082e-01

16 2.8316e-01

17 2.7562e-01

18 2.6820e-01

19 2.6088e-01

20 2.5366e-01

21 2.4654e-01

22 2.3952e-01

23 2.3258e-01

24 2.2576e-01

25 2.1904e-01

26 2.1242e-01

27 2.0592e-01

28 1.9954e-01

29 1.9331e-01

30 1.8722e-01

31 1.8127e-01

32 1.7549e-01

33 1.6987e-01

34 1.6443e-01

35 1.5916e-01

36 1.5408e-01

37 1.4919e-01

38 1.4448e-01

39 1.3995e-01

40 1.3560e-01

41 1.3143e-01

42 1.2743e-01

43 1.2360e-01

44 1.1992e-01

45 1.1639e-01

46 1.1300e-01

47 1.0975e-01

48 1.0662e-01

49 1.0361e-01

50 1.0072e-01

51 9.7925e-02

52 9.5235e-02

53 9.2641e-02

54 9.0134e-02

55 8.7710e-02

56 8.5366e-02

57 8.3101e-02

58 8.0904e-02

59 7.8777e-02

60 7.6716e-02

61 7.4714e-02

62 7.2777e-02

63 7.0891e-02

64 6.9065e-02

65 6.7287e-02

66 6.5563e-02

67 6.3884e-02

68 6.2255e-02

69 6.0666e-02

70 5.9125e-02

71 5.7622e-02

72 5.6162e-02

73 5.4739e-02

74 5.3355e-02

75 5.2007e-02

76 5.0692e-02

77 4.9415e-02

78 4.8168e-02

79 4.6956e-02

80 4.5773e-02

81 4.4621e-02

82 4.3499e-02

83 4.2404e-02

84 4.1339e-02

85 4.0300e-02

86 3.9287e-02

87 3.8302e-02

88 3.7338e-02

89 3.6402e-02

90 3.5488e-02

91 3.4596e-02

92 3.3729e-02

93 3.2882e-02

94 3.2057e-02

95 3.1253e-02

96 3.0468e-02

97 2.9704e-02

98 2.8958e-02

99 2.8232e-02

100 2.7524e-02

101 2.6832e-02

102 2.6160e-02

103 2.5504e-02

104 2.4864e-02

105 2.4241e-02

106 2.3632e-02

107 2.3040e-02

108 2.2461e-02

109 2.1898e-02

110 2.1349e-02

111 2.0813e-02

112 2.0291e-02

113 1.9782e-02

114 1.9276e-02

115 1.8768e-02

116 1.8258e-02

117 1.7745e-02

118 1.7229e-02

119 1.6711e-02

120 1.6191e-02

121 1.5669e-02

122 1.5172e-02

123 1.4790e-02

124 1.4409e-02

125 1.4027e-02

126 1.3648e-02

127 1.3272e-02

128 1.2902e-02

129 1.2538e-02

130 1.2183e-02

131 1.1836e-02

132 1.1498e-02

133 1.1171e-02

134 1.0854e-02

135 1.0546e-02

136 1.0249e-02

137 9.9619e-03

138 9.6842e-03

139 9.4159e-03

140 9.1565e-03

141 8.9057e-03

142 8.6634e-03

143 8.4290e-03

144 8.2021e-03

145 7.9827e-03

146 7.7703e-03

147 7.5645e-03

148 7.3651e-03

149 7.1718e-03

150 6.9844e-03

151 6.8026e-03

152 6.6262e-03

153 6.4550e-03

154 6.2886e-03

155 6.1270e-03

156 5.9700e-03

157 5.8173e-03

158 5.6690e-03

159 5.5246e-03

160 5.3843e-03

161 5.2477e-03

162 5.1147e-03

163 4.9853e-03

164 4.8594e-03

165 4.7367e-03

166 4.6173e-03

167 4.5009e-03

168 4.3877e-03

169 4.2772e-03

170 4.1697e-03

171 4.0650e-03

172 3.9629e-03

173 3.8634e-03

174 3.7664e-03

175 3.6720e-03

176 3.5798e-03

177 3.4901e-03

178 3.4026e-03

179 3.3173e-03

180 3.2342e-03

181 3.1532e-03

182 3.0742e-03

183 2.9972e-03

184 2.9221e-03

185 2.8489e-03

186 2.7776e-03

187 2.7080e-03

188 2.6402e-03

189 2.5741e-03

190 2.5096e-03

191 2.4468e-03

192 2.3855e-03

193 2.3258e-03

194 2.2676e-03

195 2.2108e-03

196 2.1555e-03

197 2.1015e-03

198 2.0489e-03

199 1.9976e-03

200 1.9475e-03

201 1.8988e-03

202 1.8513e-03

203 1.8049e-03

204 1.7598e-03

205 1.7157e-03

206 1.6727e-03

207 1.6309e-03

208 1.5900e-03

209 1.5498e-03

210 1.5101e-03

211 1.4710e-03

212 1.4325e-03

213 1.3946e-03

214 1.3573e-03

215 1.3207e-03

216 1.2847e-03

217 1.2493e-03

218 1.2147e-03

219 1.1814e-03

220 1.1518e-03

221 1.1227e-03

222 1.0941e-03

223 1.0660e-03

224 1.0384e-03

225 1.0114e-03

226 9.8509e-04

227 9.5936e-04

228 9.3426e-04

229 9.0979e-04

230 8.8595e-04

231 8.6275e-04

232 8.4016e-04

233 8.1818e-04

234 7.9681e-04

235 7.7602e-04

236 7.5580e-04

237 7.3614e-04

238 7.1703e-04

239 6.9845e-04

240 6.8038e-04

241 6.6281e-04

242 6.4573e-04

243 6.2911e-04

244 6.1296e-04

245 5.9724e-04

246 5.8196e-04

247 5.6709e-04

248 5.5262e-04

249 5.3854e-04

250 5.2485e-04

251 5.1151e-04

252 4.9853e-04

253 4.8590e-04

254 4.7360e-04

255 4.6163e-04

256 4.4997e-04

257 4.3861e-04

258 4.2755e-04

259 4.1678e-04

260 4.0628e-04

261 3.9606e-04

262 3.8610e-04

263 3.7640e-04

264 3.6694e-04

265 3.5773e-04

266 3.4875e-04

267 3.4000e-04

268 3.3147e-04

269 3.2316e-04

270 3.1506e-04

271 3.0717e-04

272 2.9947e-04

273 2.9197e-04

274 2.8466e-04

275 2.7753e-04

276 2.7058e-04

277 2.6381e-04

278 2.5720e-04

279 2.5077e-04

280 2.4449e-04

281 2.3837e-04

282 2.3241e-04

283 2.2659e-04

284 2.2092e-04

285 2.1539e-04

286 2.1001e-04

287 2.0475e-04

288 1.9963e-04

289 1.9463e-04

290 1.8976e-04

291 1.8502e-04

292 1.8039e-04

293 1.7588e-04

294 1.7148e-04

295 1.6719e-04

296 1.6300e-04

297 1.5893e-04

298 1.5495e-04

299 1.5107e-04

300 1.4729e-04

301 1.4361e-04

302 1.4002e-04

303 1.3651e-04

304 1.3310e-04

305 1.2977e-04

306 1.2652e-04

307 1.2336e-04

308 1.2027e-04

309 1.1725e-04

310 1.1429e-04

311 1.1139e-04

312 1.0855e-04

313 1.0576e-04

314 1.0304e-04

315 1.0038e-04

316 9.7771e-05

317 9.5223e-05

318 9.2731e-05

319 9.0297e-05

320 8.8017e-05

321 8.5809e-05

322 8.3648e-05

323 8.1535e-05

324 7.9470e-05

325 7.7452e-05

326 7.5482e-05

327 7.3560e-05

328 7.1686e-05

329 6.9858e-05

330 6.8077e-05

331 6.6341e-05

332 6.4649e-05

333 6.3001e-05

334 6.1396e-05

335 5.9833e-05

336 5.8310e-05

337 5.6828e-05

338 5.5384e-05

339 5.3977e-05

340 5.2608e-05

341 5.1274e-05

342 4.9975e-05

343 4.8710e-05

344 4.7478e-05

345 4.6278e-05

346 4.5109e-05

347 4.3971e-05

348 4.2862e-05

349 4.1781e-05

350 4.0729e-05

351 3.9703e-05

352 3.8704e-05

353 3.7731e-05

354 3.6783e-05

355 3.5858e-05

356 3.4958e-05

357 3.4080e-05

358 3.3225e-05

359 3.2391e-05

360 3.1579e-05

361 3.0787e-05

362 3.0015e-05

363 2.9263e-05

364 2.8530e-05

365 2.7815e-05

366 2.7119e-05

367 2.6440e-05

368 2.5778e-05

369 2.5132e-05

370 2.4503e-05

371 2.3890e-05

372 2.3292e-05

373 2.2709e-05

374 2.2141e-05

375 2.1587e-05

376 2.1047e-05

377 2.0521e-05

378 2.0007e-05

379 1.9507e-05

380 1.9019e-05

381 1.8543e-05

382 1.8080e-05

383 1.7627e-05

384 1.7187e-05

385 1.6757e-05

386 1.6338e-05

387 1.5929e-05

388 1.5531e-05

389 1.5142e-05

390 1.4764e-05

391 1.4394e-05

392 1.4034e-05

393 1.3684e-05

394 1.3341e-05

395 1.3008e-05

396 1.2682e-05

397 1.2365e-05

398 1.2056e-05

399 1.1755e-05

400 1.1461e-05

401 1.1174e-05

402 1.0895e-05

403 1.0622e-05

404 1.0357e-05

405 1.0098e-05

406 9.8451e-06

407 9.5988e-06

408 9.3588e-06

409 9.1248e-06

410 8.8962e-06

411 8.6729e-06

412 8.4547e-06

413 8.2416e-06

414 8.0334e-06

415 7.8301e-06

416 7.6316e-06

417 7.4378e-06

418 7.2486e-06

419 7.0639e-06

420 6.8837e-06

421 6.7106e-06

422 6.5426e-06

423 6.3786e-06

424 6.2185e-06

425 6.0622e-06

426 5.9097e-06

427 5.7609e-06

428 5.6158e-06

429 5.4743e-06

430 5.3363e-06

431 5.2018e-06

432 5.0707e-06

433 4.9429e-06

434 4.8183e-06

435 4.6969e-06

436 4.5786e-06

437 4.4633e-06

438 4.3509e-06

439 4.2414e-06

440 4.1347e-06

441 4.0307e-06

442 3.9294e-06

443 3.8306e-06

444 3.7344e-06

445 3.6406e-06

446 3.5491e-06

447 3.4600e-06

448 3.3732e-06

449 3.2886e-06

450 3.2061e-06

451 3.1257e-06

452 3.0473e-06

453 2.9709e-06

454 2.8965e-06

455 2.8239e-06

456 2.7531e-06

457 2.6842e-06

458 2.6170e-06

459 2.5514e-06

460 2.4876e-06

461 2.4253e-06

462 2.3646e-06

463 2.3054e-06

464 2.2477e-06

465 2.1915e-06

466 2.1366e-06

467 2.0832e-06

468 2.0311e-06

469 1.9803e-06

470 1.9307e-06

471 1.8824e-06

472 1.8354e-06

473 1.7895e-06

474 1.7447e-06

475 1.7011e-06

476 1.6585e-06

477 1.6171e-06

478 1.5766e-06

479 1.5372e-06

480 1.4988e-06

481 1.4613e-06

482 1.4247e-06

483 1.3891e-06

484 1.3544e-06

485 1.3205e-06

486 1.2875e-06

487 1.2553e-06

488 1.2239e-06

489 1.1933e-06

490 1.1635e-06

491 1.1344e-06

492 1.1060e-06

493 1.0784e-06

494 1.0514e-06

495 1.0251e-06

496 9.9949e-07

Rust model of bus engine replacement (id=140125528747024) solved with vfi in 496 iterations

Solver = nk

----------------------

iter err

----------------------

0 1.7085e+01

1 1.6466e+00

2 1.0015e+00

3 4.7225e-01

4 8.3180e-02

5 2.1647e-03

6 1.4257e-06

7 6.1817e-13

Rust model of bus engine replacement (id=140125528747024) solved with nk in 7 iterations

Max diff between value functions is 3.884109376883771e-05

Max diff between policy functions is 2.4160655698324263e-09

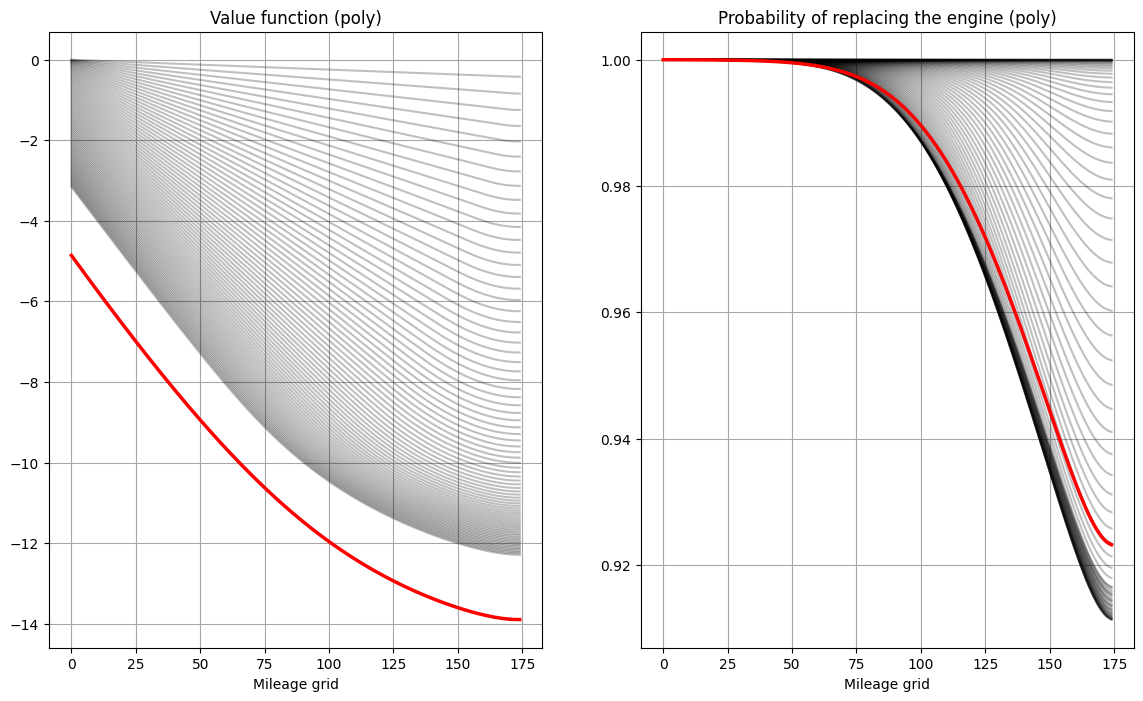

Poly-algorithm#

NK method may not be convergent at the initial point

Successive apprizimataion (SA) iterations, however, are always convergent

Poly algorithm is combination of SA and NK:

Start with SA iterations

At approximately optimal time switch to NK iterations

When to switch to NK iterations?#

Suppose \(EV_{k-1} = {EV}^\star + C\) (where \({EV}^\star\) is the fixed point)

Then the ratio of two errors \(\frac{err_{k+1}}{err_{k}} = \beta\) when the current approximation is a constant away from the fixed point.

NK iteration will immediately “strip away” the constant

Thus, switch to NK iteration when \(\frac{err_{k+1}}{err_{k}}\) is close to \(\beta\)

# when to switch from SA to NK

model = zurcher(beta=0.975)

model.solve_show(maxiter=1500,verbosity=2,plot=False);

Solver = vfi

------------------------------------------

iter err err(i)/err(i-1)

------------------------------------------

0 sa 4.2728e-01 0.427277667463

1 sa 4.1659e-01 0.974985156037

2 sa 4.0617e-01 0.974977898685

3 sa 3.9600e-01 0.974967530759

4 sa 3.8608e-01 0.974952914413

5 sa 3.7640e-01 0.974932594839

6 sa 3.6696e-01 0.974905009462

7 sa 3.5773e-01 0.974867836074

8 sa 3.4873e-01 0.974819168371

9 sa 3.3992e-01 0.974756037945

10 sa 3.3131e-01 0.974674240553

11 sa 3.2289e-01 0.974575376329

12 sa 3.1464e-01 0.974446349105

13 sa 3.0655e-01 0.974300520436

14 sa 2.9862e-01 0.974112724869

15 sa 2.9082e-01 0.973904920662

16 sa 2.8316e-01 0.973656096106

17 sa 2.7562e-01 0.973365083189

18 sa 2.6820e-01 0.973069628117

19 sa 2.6088e-01 0.972704596040

20 sa 2.5366e-01 0.972323312562

21 sa 2.4654e-01 0.971949651340

22 sa 2.3952e-01 0.971507821865

23 sa 2.3258e-01 0.971044307238

24 sa 2.2576e-01 0.970670088415

25 sa 2.1904e-01 0.970230588772

26 sa 2.1242e-01 0.969791221048

27 sa 2.0592e-01 0.969373726567

28 sa 1.9954e-01 0.969042250559

29 sa 1.9331e-01 0.968766942633

30 sa 1.8722e-01 0.968482332963

31 sa 1.8127e-01 0.968253922437

32 sa 1.7549e-01 0.968087699003

33 sa 1.6987e-01 0.967987211283

34 sa 1.6443e-01 0.967953475795

35 sa 1.5916e-01 0.967985087030

36 sa 1.5408e-01 0.968078487981

37 sa 1.4919e-01 0.968228349290

38 sa 1.4448e-01 0.968428005047

39 sa 1.3995e-01 0.968669899577

40 sa 1.3560e-01 0.968946009560

41 sa 1.3143e-01 0.969248216930

42 sa 1.2743e-01 0.969568618533

43 sa 1.2360e-01 0.969899767409

44 sa 1.1992e-01 0.970234847204

45 sa 1.1639e-01 0.970567785770

46 sa 1.1300e-01 0.970893316552

47 sa 1.0975e-01 0.971206997381

48 sa 1.0662e-01 0.971505196210

49 sa 1.0361e-01 0.971785052539

50 sa 1.0072e-01 0.972044422082

51 sa 9.7925e-02 0.972281810899

52 sa 9.5235e-02 0.972524397195

53 sa 9.2641e-02 0.972764776593

54 sa 9.0134e-02 0.972944023297

55 sa 8.7710e-02 0.973099593423

56 sa 8.5366e-02 0.973277772047

57 sa 8.3101e-02 0.973464140669

58 sa 8.0904e-02 0.973561203087

59 sa 7.8777e-02 0.973712319965

60 sa 7.6716e-02 0.973842234165

61 sa 7.4714e-02 0.973898288277

62 sa 7.2777e-02 0.974076641241

63 sa 7.0891e-02 0.974091073911

64 sa 6.9065e-02 0.974242653413

65 sa 6.7287e-02 0.974255178775

66 sa 6.5563e-02 0.974379430258

67 sa 6.3884e-02 0.974382651720

68 sa 6.2255e-02 0.974499936526

69 sa 6.0666e-02 0.974477863212

70 sa 5.9125e-02 0.974605561245

71 sa 5.7622e-02 0.974567831503

72 sa 5.6162e-02 0.974675310244

73 sa 5.4739e-02 0.974656991615

74 sa 5.3355e-02 0.974710686210

75 sa 5.2007e-02 0.974735044237

76 sa 5.0692e-02 0.974727589394

77 sa 4.9415e-02 0.974803664879

78 sa 4.8168e-02 0.974755914814

79 sa 4.6956e-02 0.974837929159

80 sa 4.5773e-02 0.974814952630

81 sa 4.4621e-02 0.974822906957

82 sa 4.3499e-02 0.974867882447

83 sa 4.2404e-02 0.974815613596

84 sa 4.1339e-02 0.974899405624

85 sa 4.0300e-02 0.974862755710

86 sa 3.9287e-02 0.974865780117

87 sa 3.8302e-02 0.974906196811

88 sa 3.7338e-02 0.974851231803

89 sa 3.6402e-02 0.974923045769

90 sa 3.5488e-02 0.974891499082

91 sa 3.4596e-02 0.974879387597

92 sa 3.3729e-02 0.974929612245

93 sa 3.2882e-02 0.974873189754

94 sa 3.2057e-02 0.974926474993

95 sa 3.1253e-02 0.974909746507

96 sa 3.0468e-02 0.974877825399

97 sa 2.9704e-02 0.974945056120

98 sa 2.8958e-02 0.974887934600

99 sa 2.8232e-02 0.974919890745

100 sa 2.7524e-02 0.974922606917

101 sa 2.6832e-02 0.974868989623

102 sa 2.6160e-02 0.974956523865

103 sa 2.5504e-02 0.974899132392

104 sa 2.4864e-02 0.974908750625

105 sa 2.4241e-02 0.974932896779

106 sa 2.3632e-02 0.974874944979

107 sa 2.3040e-02 0.974948184810

108 sa 2.2461e-02 0.974908720068

109 sa 2.1898e-02 0.974895759242

110 sa 2.1349e-02 0.974942061979

111 sa 2.0813e-02 0.974884147136

112 sa 2.0291e-02 0.974934615992

113 sa 1.9782e-02 0.974917651285

114 sa 1.9276e-02 0.974442324580

115 sa 1.8768e-02 0.973647615683

116 sa 1.8258e-02 0.972800995844

117 sa 1.7745e-02 0.971899500846

118 sa 1.7229e-02 0.970941912168

119 sa 1.6711e-02 0.969929803275

120 sa 1.6191e-02 0.968868531422

121 sa 1.5669e-02 0.967767969539

122 sa 1.5172e-02 0.968243679246

123 sa 1.4790e-02 0.974879134735

124 sa 1.4409e-02 0.974190667298

125 sa 1.4027e-02 0.973536217403

126 sa 1.3648e-02 0.972954555652

127 sa 1.3272e-02 0.972469171131

128 sa 1.2902e-02 0.972092733821

129 sa 1.2538e-02 0.971814669992

130 sa 1.2183e-02 0.971631827049

131 sa 1.1836e-02 0.971530682590

132 sa 1.1498e-02 0.971501938002

133 sa 1.1171e-02 0.971523275622

134 sa 1.0854e-02 0.971593960300

135 sa 1.0546e-02 0.971696642005

136 sa 1.0249e-02 0.971822242956

137 sa 9.9619e-03 0.971964481885

138 sa 9.6842e-03 0.972123123013

139 sa 9.4159e-03 0.972288457325

140 sa 9.1565e-03 0.972451170140

141 sa 8.9057e-03 0.972614931055

142 sa 8.6634e-03 0.972787714695

143 sa 8.4290e-03 0.972942421442

144 sa 8.2021e-03 0.973087799203

145 sa 7.9827e-03 0.973248816147

146 sa 7.7703e-03 0.973388631869

147 sa 7.5645e-03 0.973518934386

148 sa 7.3651e-03 0.973642278530

149 sa 7.1718e-03 0.973757211091

150 sa 6.9844e-03 0.973862620202

151 sa 6.8026e-03 0.973975204398

152 sa 6.6262e-03 0.974072553480

153 sa 6.4550e-03 0.974152310416

154 sa 6.2886e-03 0.974220630047

155 sa 6.1270e-03 0.974313921035

156 sa 5.9700e-03 0.974376033104

157 sa 5.8173e-03 0.974418139010

158 sa 5.6690e-03 0.974507447966

159 sa 5.5246e-03 0.974532451652

160 sa 5.3843e-03 0.974594759405

161 sa 5.2477e-03 0.974625994714

162 sa 5.1147e-03 0.974670050756

163 sa 4.9853e-03 0.974699222395

164 sa 4.8594e-03 0.974735343938

165 sa 4.7367e-03 0.974754402472

166 sa 4.6173e-03 0.974791571134

167 sa 4.5009e-03 0.974793950853

168 sa 4.3877e-03 0.974839827552

169 sa 4.2772e-03 0.974827574342

170 sa 4.1697e-03 0.974873930177

171 sa 4.0650e-03 0.974866852219

172 sa 3.9629e-03 0.974885637003

173 sa 3.8634e-03 0.974900748083

174 sa 3.7664e-03 0.974889016102

175 sa 3.6720e-03 0.974930246333

176 sa 3.5798e-03 0.974912175141

177 sa 3.4901e-03 0.974929779093

178 sa 3.4026e-03 0.974937031454

179 sa 3.3173e-03 0.974919583096

180 sa 3.2342e-03 0.974959317818

181 sa 3.1532e-03 0.974939050705

182 sa 3.0742e-03 0.974946267103

183 sa 2.9972e-03 0.974958716208

184 sa 2.9221e-03 0.974937686755

185 sa 2.8489e-03 0.974968002466

186 sa 2.7776e-03 0.974955531852

187 sa 2.7080e-03 0.974947950285

188 sa 2.6402e-03 0.974972440676

189 sa 2.5741e-03 0.974950600775

190 sa 2.5096e-03 0.974964742320

191 sa 2.4468e-03 0.974966597119

192 sa 2.3855e-03 0.974944523624

193 sa 2.3258e-03 0.974979946653

194 sa 2.2676e-03 0.974959927591

195 sa 2.2108e-03 0.974956824710

196 sa 2.1555e-03 0.974975050782

197 sa 2.1015e-03 0.974952748669

198 sa 2.0489e-03 0.974970626298

199 sa 1.9976e-03 0.974967607220

200 sa 1.9475e-03 0.974946933934

201 sa 1.8988e-03 0.974982348121

202 sa 1.8513e-03 0.974959968761

203 sa 1.8049e-03 0.974960184441

204 sa 1.7598e-03 0.974974599124

205 sa 1.7157e-03 0.974952232588

206 sa 1.6727e-03 0.974973271839

207 sa 1.6309e-03 0.974966797136

208 sa 1.5900e-03 0.974944457877

209 sa 1.5498e-03 0.974701813459

210 sa 1.5101e-03 0.974407167329

211 sa 1.4710e-03 0.974115684454

212 sa 1.4325e-03 0.973828205860

213 sa 1.3946e-03 0.973545598478

214 sa 1.3573e-03 0.973268752637

215 sa 1.3207e-03 0.972998579033

216 sa 1.2847e-03 0.972736005191

217 sa 1.2493e-03 0.972481971466

218 sa 1.2147e-03 0.972237426466

219 sa 1.1814e-03 0.972637892569

220 sa 1.1518e-03 0.974951217352

221 sa 1.1227e-03 0.974712685540

222 sa 1.0941e-03 0.974495690997

223 sa 1.0660e-03 0.974309379414

224 sa 1.0384e-03 0.974155843925

225 sa 1.0114e-03 0.974034584402

226 sa 9.8509e-04 0.973943128622

227 sa 9.5936e-04 0.973878016458

228 sa 9.3426e-04 0.973835249669

229 sa 9.0979e-04 0.973810313466

230 sa 8.8595e-04 0.973799322789

231 sa 8.6275e-04 0.973806306198

232 sa 8.4016e-04 0.973819901432

233 sa 8.1818e-04 0.973842632950

234 sa 7.9681e-04 0.973873124110

235 sa 7.7602e-04 0.973908854312

236 sa 7.5580e-04 0.973947234360

237 sa 7.3614e-04 0.973991048398

238 sa 7.1703e-04 0.974038393604

239 sa 6.9845e-04 0.974081910809

240 sa 6.8038e-04 0.974130880197

241 sa 6.6281e-04 0.974180726391

242 sa 6.4573e-04 0.974225575143

243 sa 6.2911e-04 0.974271409578

244 sa 6.1296e-04 0.974317441612

245 sa 5.9724e-04 0.974362939953

246 sa 5.8196e-04 0.974407238036

247 sa 5.6709e-04 0.974449740270

248 sa 5.5262e-04 0.974489926504

249 sa 5.3854e-04 0.974527354757

250 sa 5.2485e-04 0.974561662037

251 sa 5.1151e-04 0.974592563528

252 sa 4.9853e-04 0.974631024082

253 sa 4.8590e-04 0.974662289690

254 sa 4.7360e-04 0.974684544705

255 sa 4.6163e-04 0.974714660409

256 sa 4.4997e-04 0.974742145135

257 sa 4.3861e-04 0.974754985448

258 sa 4.2755e-04 0.974790768687

259 sa 4.1678e-04 0.974799416103

260 sa 4.0628e-04 0.974824563740

261 sa 3.9606e-04 0.974836188541

262 sa 3.8610e-04 0.974855080558

263 sa 3.7640e-04 0.974865638018

264 sa 3.6694e-04 0.974882285101

265 sa 3.5773e-04 0.974888311180

266 sa 3.4875e-04 0.974906305207

267 sa 3.4000e-04 0.974904887585

268 sa 3.3147e-04 0.974927379532

269 sa 3.2316e-04 0.974921704812

270 sa 3.1506e-04 0.974940221529

271 sa 3.0717e-04 0.974939291043

272 sa 2.9947e-04 0.974945397968

273 sa 2.9197e-04 0.974954676866

274 sa 2.8466e-04 0.974947249740

275 sa 2.7753e-04 0.974967906716

276 sa 2.7058e-04 0.974960170072

277 sa 2.6381e-04 0.974965566496

278 sa 2.5720e-04 0.974971658481

279 sa 2.5077e-04 0.974963024558

280 sa 2.4449e-04 0.974979998312

281 sa 2.3837e-04 0.974972972412

282 sa 2.3241e-04 0.974973210964

283 sa 2.2659e-04 0.974982088032

284 sa 2.2092e-04 0.974972721681

285 sa 2.1539e-04 0.974983054544

286 sa 2.1001e-04 0.974980967234

287 sa 2.0475e-04 0.974973885721

288 sa 1.9963e-04 0.974988777173

289 sa 1.9463e-04 0.974979011792

290 sa 1.8976e-04 0.974981363651

291 sa 1.8502e-04 0.974986371953

292 sa 1.8039e-04 0.974976503352

293 sa 1.7588e-04 0.974988066184

294 sa 1.7148e-04 0.974983567316

295 sa 1.6719e-04 0.974977415257

296 sa 1.6300e-04 0.974990508541

297 sa 1.5893e-04 0.974980516950

298 sa 1.5495e-04 0.974983445115

299 sa 1.5107e-04 0.974987321892

300 sa 1.4729e-04 0.974977324120

301 sa 1.4361e-04 0.974989268673

302 sa 1.4002e-04 0.974984043735

303 sa 1.3651e-04 0.974978281751

304 sa 1.3310e-04 0.974990744926

305 sa 1.2977e-04 0.974980721646

306 sa 1.2652e-04 0.974983971571

307 sa 1.2336e-04 0.974987383906

308 sa 1.2027e-04 0.974977384312

309 sa 1.1725e-04 0.974875056333

310 sa 1.1429e-04 0.974744215187

311 sa 1.1139e-04 0.974616422929

312 sa 1.0855e-04 0.974492048888

313 sa 1.0576e-04 0.974371450721

314 sa 1.0304e-04 0.974254973765

315 sa 1.0038e-04 0.974142950385

316 sa 9.7771e-05 0.974035698997

317 sa 9.5223e-05 0.973933523461

318 sa 9.2731e-05 0.973836711944

319 sa 9.0297e-05 0.973745536287

320 sa 8.8017e-05 0.974748877580

321 sa 8.5809e-05 0.974916964920

322 sa 8.3648e-05 0.974820564967

323 sa 8.1535e-05 0.974736522727

324 sa 7.9470e-05 0.974666699449

325 sa 7.7452e-05 0.974611257557

326 sa 7.5482e-05 0.974568879461

327 sa 7.3560e-05 0.974537709997

328 sa 7.1686e-05 0.974517191868

329 sa 6.9858e-05 0.974504438088

330 sa 6.8077e-05 0.974497754086

331 sa 6.6341e-05 0.974499171335

332 sa 6.4649e-05 0.974503426614

333 sa 6.3001e-05 0.974511835365

334 sa 6.1396e-05 0.974523669667

335 sa 5.9833e-05 0.974537878310

336 sa 5.8310e-05 0.974553390308

337 sa 5.6828e-05 0.974570639190

338 sa 5.5384e-05 0.974590704368

339 sa 5.3977e-05 0.974607695532

340 sa 5.2608e-05 0.974629235210

341 sa 5.1274e-05 0.974648523273

342 sa 4.9975e-05 0.974667072961

343 sa 4.8710e-05 0.974686122470

344 sa 4.7478e-05 0.974706543445

345 sa 4.6278e-05 0.974725349555

346 sa 4.5109e-05 0.974742998273

347 sa 4.3971e-05 0.974759965837

348 sa 4.2862e-05 0.974777732797

349 sa 4.1781e-05 0.974794547250

350 sa 4.0729e-05 0.974809469597

351 sa 3.9703e-05 0.974823048570

352 sa 3.8704e-05 0.974835186022

353 sa 3.7731e-05 0.974850569365

354 sa 3.6783e-05 0.974863240947

355 sa 3.5858e-05 0.974871776619

356 sa 3.4958e-05 0.974884718378

357 sa 3.4080e-05 0.974894807298

358 sa 3.3225e-05 0.974900520881

359 sa 3.2391e-05 0.974914706604

360 sa 3.1579e-05 0.974917201673

361 sa 3.0787e-05 0.974929390832

362 sa 3.0015e-05 0.974931820494

363 sa 2.9263e-05 0.974941752259

364 sa 2.8530e-05 0.974943509456

365 sa 2.7815e-05 0.974952799030

366 sa 2.7119e-05 0.974952490742

367 sa 2.6440e-05 0.974962584441

368 sa 2.5778e-05 0.974960488331

369 sa 2.5132e-05 0.974969749003

370 sa 2.4503e-05 0.974968736497

371 sa 2.3890e-05 0.974973471621

372 sa 2.3292e-05 0.974975970608

373 sa 2.2709e-05 0.974975446428

374 sa 2.2141e-05 0.974982326421

375 sa 2.1587e-05 0.974979168924

376 sa 2.1047e-05 0.974984727591

377 sa 2.0521e-05 0.974984536114

378 sa 2.0007e-05 0.974983663651

379 sa 1.9507e-05 0.974989325297

380 sa 1.9019e-05 0.974985684984

381 sa 1.8543e-05 0.974989664848

382 sa 1.8080e-05 0.974989848430

383 sa 1.7627e-05 0.974986783475

384 sa 1.7187e-05 0.974993671493

385 sa 1.6757e-05 0.974989733952

386 sa 1.6338e-05 0.974990900698

387 sa 1.5929e-05 0.974993198699

388 sa 1.5531e-05 0.974989166243

389 sa 1.5142e-05 0.974994353121

390 sa 1.4764e-05 0.974992381581

391 sa 1.4394e-05 0.974990171572

392 sa 1.4034e-05 0.974995478714

393 sa 1.3684e-05 0.974991335108

394 sa 1.3341e-05 0.974992981846

395 sa 1.3008e-05 0.974994307795

396 sa 1.2682e-05 0.974990142156

397 sa 1.2365e-05 0.974995586171

398 sa 1.2056e-05 0.974993034204

399 sa 1.1755e-05 0.974991026724

400 sa 1.1461e-05 0.974995897409

401 sa 1.1174e-05 0.974991700842

402 sa 1.0895e-05 0.974993466325

403 sa 1.0622e-05 0.974994527417

404 sa 1.0357e-05 0.974990336119

405 sa 1.0098e-05 0.974995853837

406 sa 9.8451e-06 0.974993139460

407 sa 9.5988e-06 0.974991226148

408 sa 9.3588e-06 0.974995941513

409 sa 9.1248e-06 0.974991744972

410 sa 8.8962e-06 0.974952086779

411 sa 8.6729e-06 0.974897239543

412 sa 8.4547e-06 0.974843773186

413 sa 8.2416e-06 0.974791859842

414 sa 8.0334e-06 0.974741662353

415 sa 7.8301e-06 0.974693336652

416 sa 7.6316e-06 0.974647028512

417 sa 7.4378e-06 0.974602874387

418 sa 7.2486e-06 0.974561002135

419 sa 7.0639e-06 0.974521527982

420 sa 6.8837e-06 0.974484559398

421 sa 6.7106e-06 0.974862258490

422 sa 6.5426e-06 0.974968541685

423 sa 6.3786e-06 0.974928564812

424 sa 6.2185e-06 0.974893723100

425 sa 6.0622e-06 0.974864726699

426 sa 5.9097e-06 0.974841612498

427 sa 5.7609e-06 0.974823964495

428 sa 5.6158e-06 0.974811152919

429 sa 5.4743e-06 0.974802430521

430 sa 5.3363e-06 0.974796974453

431 sa 5.2018e-06 0.974794869492

432 sa 5.0707e-06 0.974794490290

433 sa 4.9429e-06 0.974797033615

434 sa 4.8183e-06 0.974800415017

435 sa 4.6969e-06 0.974805178416

436 sa 4.5786e-06 0.974810893096

437 sa 4.4633e-06 0.974817264179

438 sa 4.3509e-06 0.974825358543

439 sa 4.2414e-06 0.974832167543

440 sa 4.1347e-06 0.974840569504

441 sa 4.0307e-06 0.974847640799

442 sa 3.9294e-06 0.974857102712

443 sa 3.8306e-06 0.974864617627

444 sa 3.7344e-06 0.974872323954

445 sa 3.6406e-06 0.974880090544

446 sa 3.5491e-06 0.974887798062

447 sa 3.4600e-06 0.974895335867

448 sa 3.3732e-06 0.974902602691

449 sa 3.2886e-06 0.974909513586

450 sa 3.2061e-06 0.974915991714

451 sa 3.1257e-06 0.974921975562

452 sa 3.0473e-06 0.974927413659

453 sa 2.9709e-06 0.974933545203

454 sa 2.8965e-06 0.974939499722

455 sa 2.8239e-06 0.974943545370

456 sa 2.7531e-06 0.974947788886

457 sa 2.6842e-06 0.974953691624

458 sa 2.6170e-06 0.974956190549

459 sa 2.5514e-06 0.974960889046

460 sa 2.4876e-06 0.974964175414

461 sa 2.4253e-06 0.974966771135

462 sa 2.3646e-06 0.974970885422

463 sa 2.3054e-06 0.974972127756

464 sa 2.2477e-06 0.974976358062

465 sa 2.1915e-06 0.974976941136

466 sa 2.1366e-06 0.974980672128

467 sa 2.0832e-06 0.974981221772

468 sa 2.0311e-06 0.974983926019

469 sa 1.9803e-06 0.974984999609

470 sa 1.9307e-06 0.974986236821

471 sa 1.8824e-06 0.974988321579

472 sa 1.8354e-06 0.974987723963

473 sa 1.7895e-06 0.974991237980

474 sa 1.7447e-06 0.974990065522

475 sa 1.7011e-06 0.974992246161

476 sa 1.6585e-06 0.974992515500

477 sa 1.6171e-06 0.974992260259

478 sa 1.5766e-06 0.974994683587

479 sa 1.5372e-06 0.974993282563

480 sa 1.4988e-06 0.974995164061

481 sa 1.4613e-06 0.974995140248

482 sa 1.4247e-06 0.974994257800

483 sa 1.3891e-06 0.974996825793

484 sa 1.3544e-06 0.974995275443

485 sa 1.3205e-06 0.974996190164

486 sa 1.2875e-06 0.974996774726

487 sa 1.2553e-06 0.974995175010

488 sa 1.2239e-06 0.974997768072

489 sa 1.1933e-06 0.974996541148

490 sa 1.1635e-06 0.974996168463

491 sa 1.1344e-06 0.974997844581

492 sa 1.1060e-06 0.974996184116

493 sa 1.0784e-06 0.974997391628

494 sa 1.0514e-06 0.974997419209

495 sa 1.0251e-06 0.974995746383

496 sa 9.9949e-07 0.974998498597

Rust model of bus engine replacement (id=140125384780368) solved with vfi in 496 iterations

# compare SA, NK and polyalgorithm

m = zurcher(beta=0.975)

ev,pk = m.solve_show(tol=1e-10,maxiter=1500)

ev,pk = m.solve_show(tol=1e-10,solver='nk',plot=False)

polyset = {'sa_min':10,

'sa_max':100,

'switch_tol':0.000215,

}

ev,pk = m.solve_show(tol=1e-10,verbosity=2,solver='poly',**polyset)

Rust model of bus engine replacement (id=140125384888272) solved with vfi in 860 iterations

Rust model of bus engine replacement (id=140125384888272) solved with nk in 7 iterations

Solver = poly

------------------------------------------

iter err err(i)/err(i-1)

------------------------------------------

0 sa 4.2728e-01 0.427277667463

1 sa 4.1659e-01 0.974985156037

2 sa 4.0617e-01 0.974977898685

3 sa 3.9600e-01 0.974967530759

4 sa 3.8608e-01 0.974952914413

5 sa 3.7640e-01 0.974932594839

6 sa 3.6696e-01 0.974905009462

7 sa 3.5773e-01 0.974867836074

8 sa 3.4873e-01 0.974819168371

9 sa 3.3992e-01 0.974756037945

10 sa 3.3131e-01 0.974674240553

11 sa 3.2289e-01 0.974575376329

12 sa 3.1464e-01 0.974446349105

13 sa 3.0655e-01 0.974300520436

14 sa 2.9862e-01 0.974112724869

15 sa 2.9082e-01 0.973904920662

16 sa 2.8316e-01 0.973656096106

17 sa 2.7562e-01 0.973365083189

18 sa 2.6820e-01 0.973069628117

19 sa 2.6088e-01 0.972704596040

20 sa 2.5366e-01 0.972323312562

21 sa 2.4654e-01 0.971949651340

22 sa 2.3952e-01 0.971507821865

23 sa 2.3258e-01 0.971044307238

24 sa 2.2576e-01 0.970670088415

25 sa 2.1904e-01 0.970230588772

26 sa 2.1242e-01 0.969791221048

27 sa 2.0592e-01 0.969373726567

28 sa 1.9954e-01 0.969042250559

29 sa 1.9331e-01 0.968766942633

30 sa 1.8722e-01 0.968482332963

31 sa 1.8127e-01 0.968253922437

32 sa 1.7549e-01 0.968087699003

33 sa 1.6987e-01 0.967987211283

34 sa 1.6443e-01 0.967953475795

35 sa 1.5916e-01 0.967985087030

36 sa 1.5408e-01 0.968078487981

37 sa 1.4919e-01 0.968228349290

38 sa 1.4448e-01 0.968428005047

39 sa 1.3995e-01 0.968669899577

40 sa 1.3560e-01 0.968946009560

41 sa 1.3143e-01 0.969248216930

42 sa 1.2743e-01 0.969568618533

43 sa 1.2360e-01 0.969899767409

44 sa 1.1992e-01 0.970234847204

45 sa 1.1639e-01 0.970567785770

46 sa 1.1300e-01 0.970893316552

47 sa 1.0975e-01 0.971206997381

48 sa 1.0662e-01 0.971505196210

49 sa 1.0361e-01 0.971785052539

50 sa 1.0072e-01 0.972044422082

51 sa 9.7925e-02 0.972281810899

52 sa 9.5235e-02 0.972524397195

53 sa 9.2641e-02 0.972764776593

54 sa 9.0134e-02 0.972944023297

55 sa 8.7710e-02 0.973099593423

56 sa 8.5366e-02 0.973277772047

57 sa 8.3101e-02 0.973464140669

58 sa 8.0904e-02 0.973561203087

59 sa 7.8777e-02 0.973712319965

60 sa 7.6716e-02 0.973842234165

61 sa 7.4714e-02 0.973898288277

62 sa 7.2777e-02 0.974076641241

63 sa 7.0891e-02 0.974091073911

64 sa 6.9065e-02 0.974242653413

65 sa 6.7287e-02 0.974255178775

66 sa 6.5563e-02 0.974379430258

67 sa 6.3884e-02 0.974382651720

68 sa 6.2255e-02 0.974499936526

69 sa 6.0666e-02 0.974477863212

70 sa 5.9125e-02 0.974605561245

71 sa 5.7622e-02 0.974567831503

72 sa 5.6162e-02 0.974675310244

73 sa 5.4739e-02 0.974656991615

74 sa 5.3355e-02 0.974710686210

75 sa 5.2007e-02 0.974735044237

76 sa 5.0692e-02 0.974727589394

77 nk 1.7465e+00 34.452068933881

78 nk 6.4457e-03 0.003690746725

79 nk 3.5956e-06 0.000557818120

80 nk 1.7195e-12 0.000000478233

Rust model of bus engine replacement (id=140125384888272) solved with poly in 80 iterations

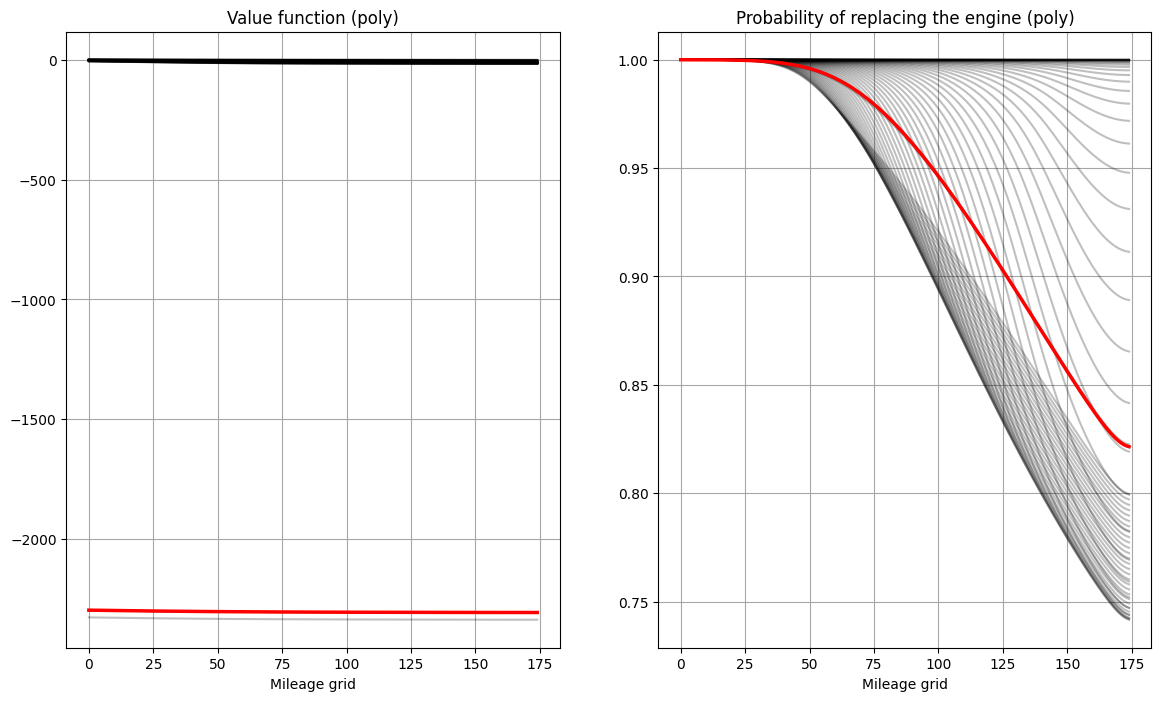

# original parameters from Rust 1987

m = zurcher()

polyset = {'sa_min':10,

'sa_max':100,

'switch_tol':0.0005,

}

ev,pk = m.solve_show(tol=1e-10,solver='poly',**polyset)

# time the solver

%timeit -n 5 -r 10 m.solve_poly(tol=1e-10,**polyset)

Rust model of bus engine replacement (id=140125385141520) solved with poly in 68 iterations

6.32 ms ± 213 μs per loop (mean ± std. dev. of 10 runs, 5 loops each)

Nested fixed point algorithm (NFXP) MLE estimation#

Maximum likelihood estimator is applicable when the model yields a probability distribution for the observable data

Let \(L(x,\theta)\) denote the distribution (pdf) of the observables \(x\) implied by the model with parameter vector \(\theta\)

Let \(Z_n = (z_i,\dots,z_n)\) denote the data, consisting of \(n\) independent observations (random sample)

MLE and MSM are two main estimation methods for dynamic economic models

Likelihood function#

If \(L(x,\theta)\) is a discrete distribution then \(L(x,\theta)\) computed at the data point gives the probability of observing the given data point exactly

If \(L(x,\theta)\) is a continuous distribution then \(L(x,\theta)\) computed at the data point is analogous to the probability of observing the given data point

Likelihood function is

Definition of MLE estimator#

\(\theta \in \Theta\) is parameter space

\(Z_n = (z_1,\dots,z_n)\) is observed data

Asymptotic properties of MLE#

Consistency: \(\; \hat{\theta}_{MLE} \xrightarrow{\mathcal{P}} \theta_0\)

Asymptotic normality: \(\; \sqrt{n} ( \hat{\theta}_{MLE} - \theta_0 ) \xrightarrow{\mathcal{D}} N(0,\mathcal{I}(\theta_0)^{-1})\)

Asymptotic efficiency: MLE approaches the smallest possible variance (Cramér-Rao bound) for unbiased estimator when \(n \rightarrow \infty\)

Functional invariance: MLE of \(\gamma_0 = g(\theta_0)\) is given by \(g(\hat{\theta}_{MLE})\) if \(g(\cdot)\) is continuously differentiable function

Asymptotic variance of MLE estimator#

variance is given by the inverse of the Fisher information, computed from pdf \(L(x,\theta_0)\)

alternatively, Fisher information matrix can be computed from log-likelihood function \(\ell_n(\theta_0)\) of \(n\) i.i.d. random variables in place of data \(Z_n\)

Estimating Fisher information#

Fisher information depends on model pdf \(L(x,\theta_0)\), hardly computable

Can be consistently estimated due to LLN: \(-\tfrac{1}{n} \sum_i^n \frac{\partial^2}{\partial\theta \partial\theta} \ell(z_i,\theta) \xrightarrow{P} \mathcal{I}(\theta_0)\)

This gives observed Fisher information

Plugging in the estimate \(\hat{\theta} = \hat{\theta}_{MLE}\) in we have

Information equality#

similar to the two equivalent definitions for the expected Fisher information, for the observed Fisher information it holds (assuming \(\theta_0\) in a scalar)

let parameter \(\theta \in \mathbb{R}^K\) be a vector with \(K\) elements

need to adjust both second order derivative and the square of the first order derivative

the square in RHS originates in the calculation of variance of \(\frac{\partial \ell_n(\theta_0)}{\partial\theta}\)

Outer product of gradients#

consider vector random variable \(\tilde{X} = (\tilde{X}_1,\dots,\tilde{X}_K)^{T}\), so \(\tilde{X}\) is column-vector \(K \times 1\)

expectation of \(\tilde{X}\) is given by the \(K \times 1\) vector \(\mathbb{E}\tilde{X}\)

\(K \times K\) variance-covariance matrix \(\Sigma\) of \(\tilde{X}\) is given by the expectation of the outer product \(\otimes\)

variances of the elements of \(\tilde{X}\) are on the main diagonal of \(\Sigma\), whereas the off-diagonal elements contain covariances \(cov(\tilde{X}_i,\tilde{X}_j)\) for all \(i \ne j\)

Information matrix equality#

let \(H(\ell_n(\theta_0)) = \frac{\partial^2}{\partial\theta \partial\theta} \ell_n(\theta_0)\) denote the \(K \times K\) Hessian matrix of \(\ell_n(\theta_0)\)

let \(\nabla f(\theta) = \frac{\partial}{\partial\theta} f(\theta)\) denote the gradient of \(f(\theta)\), \(K \times 1\) vector

let \(\nabla\ell(Z_n, \theta_0) = \big( \frac{\partial}{\partial\theta} \ell(z_1,\theta_0),\dots,\frac{\partial}{\partial\theta} \ell(z_n,\theta_0) \big)\) denote the \(K \times n\) matrix of gradients of \(\ell(z_i,\theta_0)\) stacked for all \(i\)

Then we have the information matrix equality

where parameter \(\theta \in \mathbb{R}^K\) is a vector with \(K\) elements

Summary and conclusion#

\(\theta \in \Theta\) is parameter space

\(Z_n = (z_1,\dots,z_n)\) is observed data

Berndt-Hall-Hall-Hausman (BHHH) algorithm#

information matrix equality gives an approximation of the Hessian of the log-likelihood function \(\ell_n(\theta_0)\)

can be used in quasi-Newton optimization method! \(\Rightarrow\) BHHH algorithm

outer product of gradients result in semi-definite matrix for every \(\theta\) so even if the approximation is not accurate, it will never point Newton iteration in the wrong direction

search of appropriate step size in the direction found by approximated Hessian is part of the algorithm to ensure global convergence

📖 E.K. Berndt, B.H. Hall, R.E. Hall and J.A. Hausman, 1974 “Estimation and inference in nonlinear structural models”

Estimating Zurcher model with NFXP#

for every value of structural parameters \(\theta = (RC,\theta_1,\theta_20,\dots,\theta_20,\beta)\)

we can solve the model fast using NK iterations within the polyalgorithm

based on the solution \(EV_\theta(x,d)\) can form choice probabilities \(P(\text{keep}|x,\theta)\) and \(P(\text{replace}|x,\theta)\)

Next: given the data on mileage (\(x\)) and choices (\(d\)), can form likelihood function \(L(x,d,\theta)\), and proceed with maximum likelihood estimation

Data#

Harold Zurcher’s Maintenance records of 162 busses in 8 groups

Monthly observations of mileage on each bus (odometer reading)

Data on maintenance operations:

Routine periodic maintenance (i.e. brake adjustment)

Replacement or repair at time of failure

Major engine overhaul and/or replacement (the focus of the paper)

Data \((x_{i,t},d_{i,t})\) where \(x_{i,t}\) is discretized mileage (bin indexes), and \(d_{i,t}\) is the observed choice at this mileage for each bus \(i\) in each month \(t\)

Likelihood function#

MLE estimator#

Unconstrained optimiztion, but requires the computation of \(EV_{\theta}\) for each value of parameter \(\theta\)

Nested loop#

Outer loop = Hill-climbing algorithm

Log-likelihood function \(\ell_n(\theta,EV_{\theta})\) is maximized with respect to \(\theta\)

Quasi-Newton algorithm with BHHH approximation of Hessian

Each evaluation of \(\ell_n(\theta,EV_{\theta})\) requires solution for the fixed point \(EV_{\theta}\)

Inner loop = Fixed point algorithm

Solver for the fixed point of the Bellman operator \(EV_{\theta} = \Gamma(EV_{\theta})\)

Successive approximations (VFI) + Newton-Kantorovich iterations in a polyalgorithm

Important details#

Performance: gradient based Newton method to maximize likelihood

Analytical gradients: using implicit function theorem and chain rule for the outer loop, and Fréchet derivative for the inner loop

Use BHHH: outer product of gradient approximation for Hessian

Numerical stability: recenter logsum and choice probabilities (demax trick)

Further info: NFXP manual (see further learning resources)

Analytical gradient of the likelihood function#

straightforward with multiple application of chain rule

the key point is expressing derivatives of the expected value function \(\frac{\partial EV_{\theta}}{\partial \theta}\), which is done with Banach space version of implicit function theorem, applied to the fixed point equation

\(\Gamma_{\theta}(\cdot)\) is the (finite approximation of) Bellman operator in expected function space

\(\frac{\partial \Gamma_{\theta}}{\partial EV_{\theta}}\) is the (finite approximation of) Fréchet derivative

📖 John Rust “NFXP Pocket Guide” Download pdf

References and Additional Resources

📖 Rust [1987] “Optimal Replacement of GMC Bus Engines: An Empirical Model of Harold Zurcher”

📖 John Rust “NFXP Manual”, Version 6, October 2000

Download pdf📖 John Rust “NFXP Pocket Guide”

Download pdf📖 Iskhakov et al. [2016] “Constrained optimization approaches to estimation of structural models: Comment”

📺 Econometric Society Dynamic Structural Econometrics (DSE) lecture by Bertel Schjerning YouTube video

John Rust wiki

Google scholar citing Rust (1987) link

📖 Adda Cooper “Dynamic Economics” pp. 83-85

Note on Information Matrix Equality

pdfMatlab implementation of full solver and Rust estimator (NFXP) link to GitHub repo

ruspyPython package implementing NFXP OpenSourceEconomics/ruspyCompiting ergodic distribution of a Markov chain link